
Technical causes for duplicate content

- Content can be accessed both with and without "www"

- Content can be accessed both via "http" and via "https"

- Content can be accessed both with and without a trailing slash

- Content can be accessed both with and without the index file (such as "index.html") in the URL

- Content can also be accessed with tracking parameters appended

- Content can also be accessed with session IDs in the URL

- Content can be accessed with both upper-case and lower-case letters in the URL

The solution to these problems is the 301 redirect: every URL variant that should not be accessible on its own is permanently redirected (301) to the correct page. For example, anyone who types in "musterseite.de" is redirected via 301 to

http://www.musterseite.de.
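The redirect logic can be sketched in Python as a function that maps any requested URL to one preferred form. This is a minimal sketch, not a server configuration: the preferred scheme (https here, whereas the example above uses http), the host "www.musterseite.de", the index file names, the trailing-slash rule and the parameter names treated as tracking or session data are all assumptions to adapt to your own site. If the preferred form differs from the requested URL, the server would answer with a 301 and that target in its Location header.

```python
from typing import Optional, Tuple
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical preferred URL form; adapt to your own site.
CANONICAL_SCHEME = "https"
CANONICAL_HOST = "www.musterseite.de"
INDEX_FILES = ("index.html", "index.php")
# Query parameters assumed to carry only session or tracking data.
SESSION_PARAMS = {"sid", "phpsessid", "sessionid"}
TRACKING_PREFIXES = ("utm_",)


def canonical_url(url: str) -> str:
    """Map any variant of a URL to its single preferred form."""
    parts = urlsplit(url)
    # Assumes the site serves all paths in lower case.
    path = parts.path.lower() or "/"
    # Drop a trailing index file: /shop/index.html -> /shop/
    for index_file in INDEX_FILES:
        if path.endswith("/" + index_file):
            path = path[: -len(index_file)]
            break
    # Assumed rule: paths without a file extension end with a slash.
    last_segment = path.rsplit("/", 1)[-1]
    if last_segment and "." not in last_segment:
        path += "/"
    # Keep only query parameters that actually change the content.
    query = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in SESSION_PARAMS
        and not key.lower().startswith(TRACKING_PREFIXES)
    ]
    return urlunsplit((CANONICAL_SCHEME, CANONICAL_HOST, path, urlencode(query), ""))


def redirect_for(url: str) -> Optional[Tuple[int, str]]:
    """Return (301, target) for a non-preferred variant, else None."""
    target = canonical_url(url)
    return (301, target) if target != url else None
```

For example, "http://musterseite.de/Shop/index.html?utm_source=newsletter" would be sent via a single 301 to "https://www.musterseite.de/shop/". Normalizing all aspects in one step avoids chains of several consecutive redirects.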

Filter functions and taxonomies

Shop and blog software uses filter and sorting functions as well as taxonomies (tags) to improve the user experience: visitors can narrow down articles or products by topic or date. As a result, different versions of the overview pages are generated dynamically, each with largely the same content. These URLs usually differ only in their parameters. To tell search engines which of these versions is the original, there is the canonical tag.

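The canonical tag is placed in the head of the page and tells search engines which page is the original and should appear in the search results. As a rule, the search engine then indexes only this original page and treats the other versions as duplicates of it. Search engines treat the tag as a strong hint rather than a binding directive, so they may occasionally choose a different URL.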
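In the page template, the canonical tag is a `<link rel="canonical">` element in the head. The Python sketch below derives it for a filtered or sorted overview page by dropping the query parameters that only produce variants. The parameter names in `VARIANT_PARAMS` are hypothetical and depend on your shop or blog software.

```python
from html import escape
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical names of filter and sort parameters in a shop or blog.
VARIANT_PARAMS = {"sort", "order", "color", "size"}


def canonical_link_tag(url: str) -> str:
    """Build the <link rel="canonical"> element for a filtered overview page."""
    parts = urlsplit(url)
    # Drop parameters that only create filtered or sorted variants.
    kept = [(k, v) for k, v in parse_qsl(parts.query) if k not in VARIANT_PARAMS]
    canonical = urlunsplit((parts.scheme, parts.netloc, parts.path, urlencode(kept), ""))
    # Escape the URL so that "&" is valid inside the HTML attribute.
    return f'<link rel="canonical" href="{escape(canonical, quote=True)}">'
```

For "https://www.musterseite.de/shoes/?color=red&sort=price" this yields `<link rel="canonical" href="https://www.musterseite.de/shoes/">`, so every filtered or sorted version points to the unfiltered overview page.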

The problem with pagination

Pagination is used to spread long content over several individual pages. This includes long articles and the overview pages of online stores. Ideally, each paginated page would get its own title tag, meta description, etc., but that is a lot of work and not always helpful for users. If, however, all individual pages share the same title tag and description, and therefore the same boilerplate, they compete with each other for the same keyword (keyword cannibalization).

To exclude this duplicate content, you would therefore have to set the individual pages to "noindex, follow". That is not desirable, however, since all page content should be findable in the search engine.

This problem can be avoided by using the rel="next" and rel="prev" tags in combination with the canonical tag. Like the canonical tag, these tags are placed in the head of the page and tell the search engine which pages directly precede and follow it. Note, however, that Google announced in 2019 that it no longer uses rel="next" and rel="prev" as an indexing signal.
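How a template might emit these head elements for any page of a paginated overview can be sketched in Python. The URL scheme (page 1 at the bare URL, further pages with a hypothetical `?page=n` parameter) is an assumption, and so is the choice to let each paginated page name its own URL as canonical.

```python
from typing import List


def page_url(base_url: str, page: int) -> str:
    """Hypothetical URL scheme: page 1 is the bare URL, later pages add ?page=n."""
    return base_url if page == 1 else f"{base_url}?page={page}"


def pagination_head_tags(base_url: str, page: int, last_page: int) -> List[str]:
    """Return the <link> elements for the head of one paginated page."""
    if not 1 <= page <= last_page:
        raise ValueError(f"page {page} outside 1..{last_page}")
    # Assumption: each paginated page names its own URL as canonical.
    tags = [f'<link rel="canonical" href="{page_url(base_url, page)}">']
    if page > 1:
        tags.append(f'<link rel="prev" href="{page_url(base_url, page - 1)}">')
    if page < last_page:
        tags.append(f'<link rel="next" href="{page_url(base_url, page + 1)}">')
    return tags
```

For page 2 of five, `pagination_head_tags("https://www.musterseite.de/shoes/", 2, 5)` returns a canonical tag for "https://www.musterseite.de/shoes/?page=2", a prev tag for "https://www.musterseite.de/shoes/" and a next tag for "https://www.musterseite.de/shoes/?page=3". The first page gets no prev tag and the last page no next tag. Linking page 1 by its bare URL avoids creating a second "?page=1" variant of it.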

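For page 2, for example, the head would contain three link elements:

- rel="canonical" refers to the original page,

- rel="prev" stands for the previous page and

- rel="next" for the following page.

Non-technical duplicate content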

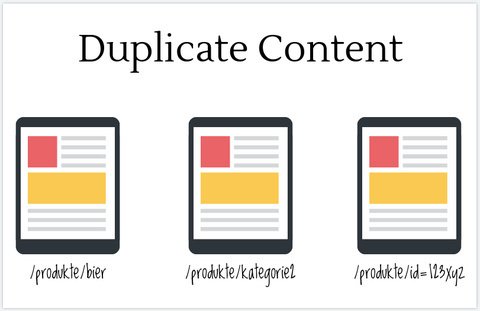

There are two cases here. In the first case, entire texts or text blocks appear several times on different URLs of the same website (internal duplicate content). In the second case, content is copied from other websites, which is sometimes also called plagiarism. A typical example is product texts taken over from manufacturers without revision.
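Internal duplicates of the first kind can be spotted with a simple check. The Python sketch below assumes you already have the visible text of each URL (for example from a crawl of your own site), splits it into paragraphs, normalizes case and whitespace, and reports every paragraph that appears on more than one URL. The `min_words` threshold is an arbitrary assumption meant to skip short boilerplate such as button labels.

```python
import re
from collections import defaultdict
from typing import Dict, List


def repeated_blocks(pages: Dict[str, str], min_words: int = 8) -> Dict[str, List[str]]:
    """Map every text block found on two or more URLs to those URLs."""
    seen = defaultdict(set)
    for url, text in pages.items():
        # Treat blank-line-separated paragraphs as text blocks.
        for block in re.split(r"\n\s*\n", text):
            # Normalize case and whitespace so trivial differences do not hide a copy.
            normalized = " ".join(block.lower().split())
            if len(normalized.split()) >= min_words:
                seen[normalized].add(url)
    return {block: sorted(urls) for block, urls in seen.items() if len(urls) > 1}
```

This only finds exact repeats after normalization; slightly reworded copies would need fuzzier comparison methods, which this sketch does not attempt.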

Writing texts is the most important and most difficult task in on-page optimization. Take the time to create real added value for your visitors, and never copy entire paragraphs from other pages! Search engines try to determine which page published the content first and will usually rank that page above the duplicates.

Further reference:

https://webmaster-de.googleblog.com/2008/09/die-duplicate-content-penal…

Video

The following video takes a closer look at the problem of "duplicate content":